Summary

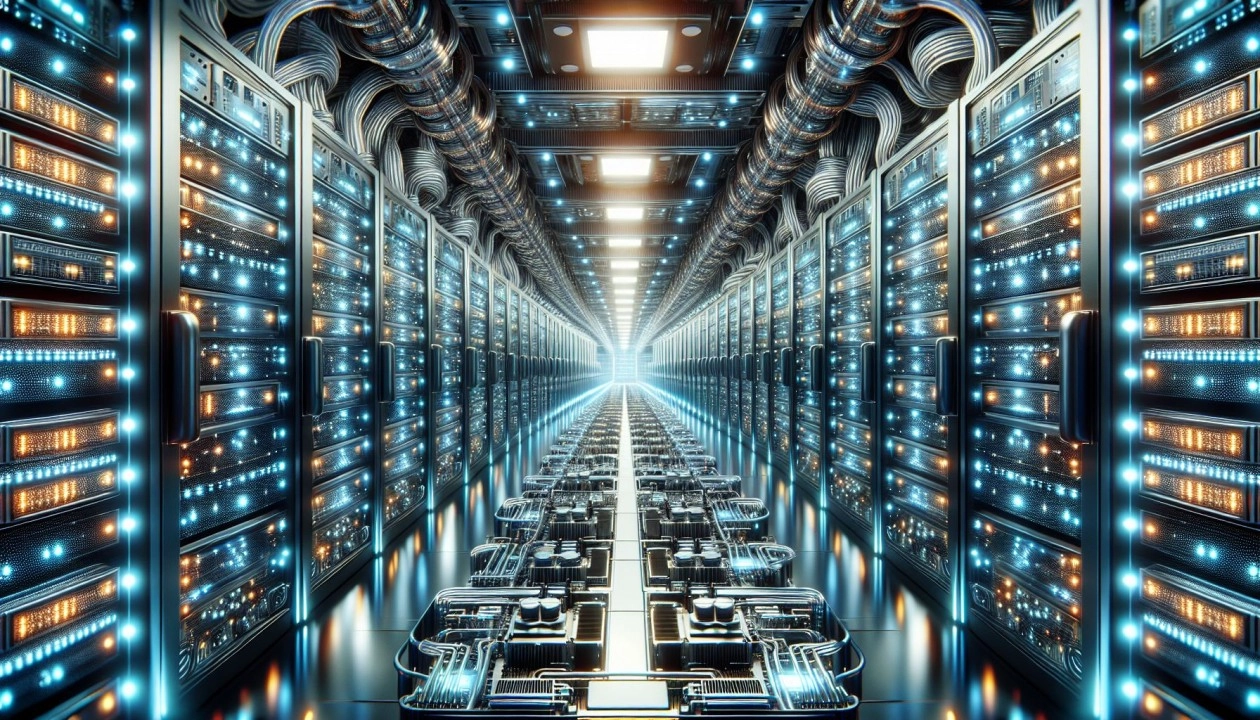

Traditional High-Performance Computing (HPC) systems struggle to scale memory and processing power efficiently while maintaining low latency and cost-effectiveness. The proposed solution addresses these challenges by creating large-scale shared memory clusters for HPC and AI workloads. This approach delivers improved performance, cost-efficient scalability, and a low-latency interconnect, which is crucial for large memory applications. Ultimately, it enables massive computing systems that operate under a single OS and manage thousands of cores and terabytes of RAM.

Problem

Traditional high-performance computing (HPC) systems face challenges in scaling memory and processing power efficiently while maintaining low latency and cost-effectiveness.

Impact

Enables massive computing systems that run under a single OS with thousands of cores and terabytes of RAM.

Partners

Atos, Intel, AMD